Update

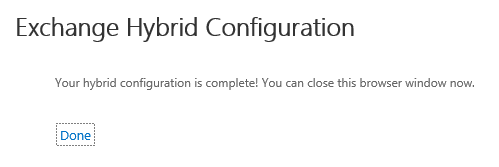

After a few weeks of mailing back and forth with Microsoft’s support, I was today (finally) able to confirm that the issue which I described below is now solved.

It seems that Microsoft rolled out a hotfix/code change for their Exchange Online service. Although, at first, I thought the issue was related to a bug in EMC for not correctly issuing all parameters when initiating a remote mailbox move, it seems the issue had more to it than that. Basically, what happened is that when MRS moved the mailbox from on-premises environment to Office 365, it wouldn’t keep the link to the already-existing archive. This caused a new (empty) archive to be created and could possibly cause data loss.

I’m happy to see what time and effort Microsoft has put into solving this issue. It proves that Microsoft is concerned about the quality of their product / service. In fact, it would surprise me if they weren’t. A bug that could cause data-loss is not really something you’d want to carry around for a long time!

Thanks to everyone involved and kudo’s to Philippe Phan Cao Bach (Sr. Escalation Engineer) who was working with me on this case.

Original Post

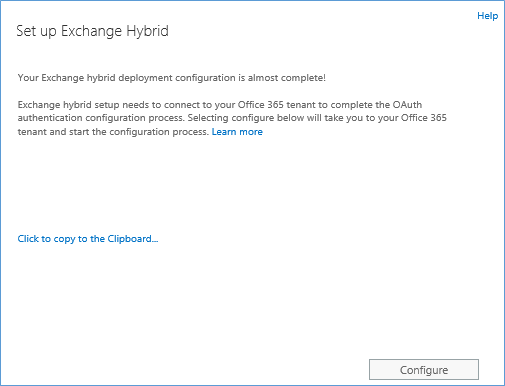

Office 365 offers great ways to enhance the functionalities of your on-premises deployment. By running the Hybrid Configuration Wizard (which Steve Goodman explains in this article) you can configure both environments to act as one; allowing you to make use of features such as e.g. Online Archives (EOA).

With Exchange Online Archives, your primary mailbox stays in your on-premises Exchange server, whereas the archive will – as the name might have given away – be hosted in Office 365. If you’re interested in finding out more about Online Archives, I suggest that you take a look at Bharat Suneja’s session at TechEd this year: “Archiving in the cloud with Exchange Online Archiving”

The problem

To me, one of the most interesting things about a hybrid deployment is the flexibility it offers. You can put a few mailboxes in Office 365, try them out and move more to the service if you like it.

If you are looking to take that approach, this information might be interesting for you!

Imagine the following: you are trying out Office 365 and decide to use Online Archives to start with. You provision the archives and life is great! After a while you decide you want to use more and you decide to move some mailboxes to Office 365. However, after your users have been moved to Office 365 they start complaining that their archive is empty.

It seems that – although this scenario is supported – there are some issues with the provisioning process when you move a user to Office 365 that previously already had an Online Archive: it get’s “wiped”. At least, that’s how it looks like.

At first, I though the data would reappear after a while, so I made sure that I waited long enough. Unfortunately even after a few days, the archives was still empty.

I decided to do some tests, to make sure this wasn’t a standalone case. Perhaps something went wrong during the move. To my surprise, tests confirmed what was going on: although the archive contained items prior to the move, they are now empty.

To explain what happens, let me describe the process I used to reproduce this issue.

This first screenshot show the details of the on-premises mailbox that has a cloud-based archive (EOA) enabled. This archive contains 4 (test) items:

![image image]()

Afterwards, I moved the mailbox through the Exchange Management Console using the “New Remote Move Request”-wizard.

Because on-premises only a mailbox exists, you don’t have the option to move an archive (which is normal):

![image image]()

The move completed successfully, and after having waited long enough (DirSync etc.). I verified the mailbox’s settings:

![image image]()

The interesting part here is that the Archive, although having the same GUID, appears to have been moved to the same database as the mailbox. Before the archive resided in database “EURPRD04DG032-db055” whereas now it’s in “EURPRD04DG030-db041”.

To ascertain myself that this wasn’t causing problems, I decided to do another test. When executing the MoveRequest, I specified to what database the archive should be moved to. I made sure that the target database of the Online Archive was set to the database it was already residing in before moving the mailbox:

New-MoveRequest “Testmivh5” –RemoteHostName “hostname.company.com” –targetdeliverydomain “tenant.mail.onmicrosoft.com” –ArchiveTargetDatabase “EURPRD04DC032-db055” –RemoteCredential (Get-Credential)

Note this cmdlet was executed from PowerShell connected to Exchange Online.

After the move completed (successfully btw), I – again – waited long enough for DirSync/replication/provisioning to occur. I deliberately didn’t force DirSync to ensure that wasn’t causing any issues either. But alas, none of that helped: the archive was again empty.

A quick look at the object’s attributes revealed that – although a target database parameter was provided – the archive still got moved to the same database as the user:

![image image]()

Then, I was thinking that the ‘old’ archive perhaps got disabled and that a new one was created. Although this would be strange since the GUID of the archive remains the same, I thought it was worth a try. Again: no joy! No disconnected mailboxes were to be found.

After all this testing, I had reasons enough to call Microsoft Support. After a few calls back and forth, they recently came back to me confirming that this is a known issue and that they’re currently working on it.

Until today I’m still not sure what the cause of the problem is. I haven’t received any feedback yet either. Of course, I will keep you posted as soon as I find out more!

Temporary workaround

It might sound too obvious, but the workaround is simple: either create both archive and mailbox in the cloud or create the (both!) on-premises first and move them together to the cloud. Both cases work just fine!

Conclusion

Although the last thing you’d want to experience is data-loss, I’m well aware that only a few customers, world-wide, would try this scenario. Nevertheless, it’s an issue that should be addressed quickly.

In our case, we have lost only a single archive worth a few hundred megabytes of emails. I can imagine that losing the wrong kind of emails might be a real big issues for some companies. I haven’t asked, but I’m pretty confident that – even though the emails seem lost – Microsoft can somehow recover the data so that you don’t really “lose” anything. I honestly cannot imagine otherwise.

Does this mean that I discourage using features like EOA? Absolutely not. I still have my hosted archive and I am pretty happy with it. Apart from some inconveniences which I will write about another time, it provides me with everything I need. Furthermore, it allows us to give everyone a relatively large archive without having to bear the costs of additional storage.

Until later!

![]()

![]()